Although AI can help with fact-checking to some extent, it has limitations and weaknesses when verifying content, whether still images, videos, or text. Citing AI-generated answers requires caution, because doing so can turn into selectively seeking evidence that supports one’s existing beliefs, that is, confirmation bias.

What are the limitations of AI in fact-checking? Cofact examines this question in this article, using as a case study the fact-checking of images of Phakamon Nunanan, a Member of Parliament from the People’s Party, assisting flood victims in Hat Yai.

Full report

Social media users jointly examined images of Phakamon Nunanan, a party-list Member of Parliament from the People’s Party, while she was assisting flood victims in Hat Yai District, Songkhla Province, in late November 2025. This came after allegations circulated online claiming that the floodwater level was not actually as high as it appeared in the images.

Various pieces of evidence taken together, such as Google Street View images and on-site video footage recorded by a homeowner living near the flooded area, confirmed that the location where Phakamon, also known as “MP Lisa,” was assisting flood victims was indeed deeply flooded. Surawich Verawan, a senior journalist and columnist, nevertheless reached a different conclusion by citing AI-based image analysis.

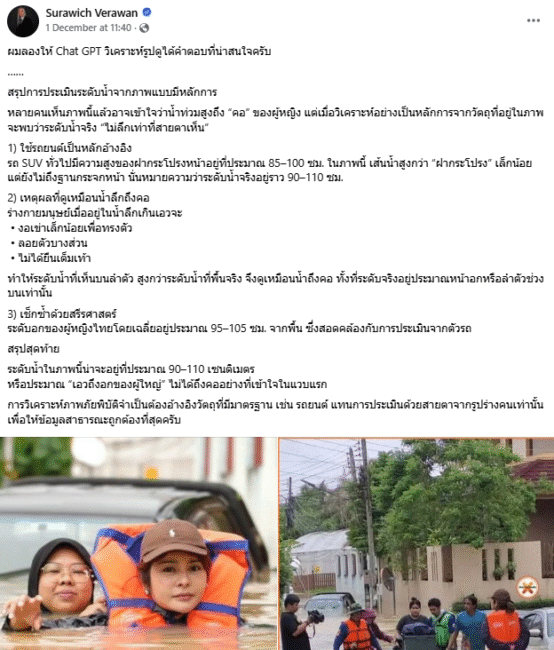

Surawich posted on his Facebook account “Surawich Verawan” on 1 December 2025, stating that if AI were used to analyze the image “in a principled manner,” it would be found that “the actual water level was not as deep as it appeared to the naked eye.” The post received more than 3,100 likes and over 180 shares (as of 8 December).

While AI can assist in fact-checking to some extent, fact-checkers argue that it has limitations and weaknesses when verifying content, whether still images, videos, or text. Relying on AI-generated answers therefore requires caution, as it may amount to seeking evidence that merely reinforces one’s pre-existing beliefs, that is, confirmation bias.

What are the limitations of AI in fact-checking? Cofact analyzes this question in this article, using the images of MP Lisa assisting flood victims as a case study.

The Origin of the Images

Several provinces in southern Thailand experienced severe flooding that began on 17 November and lasted for more than two weeks, with Songkhla Province hit particularly hard. Songkhla accounted for 229 of the 267 deaths recorded nationwide (according to a Thai PBS report citing the Ministry of Public Health as of 2 December 2025).

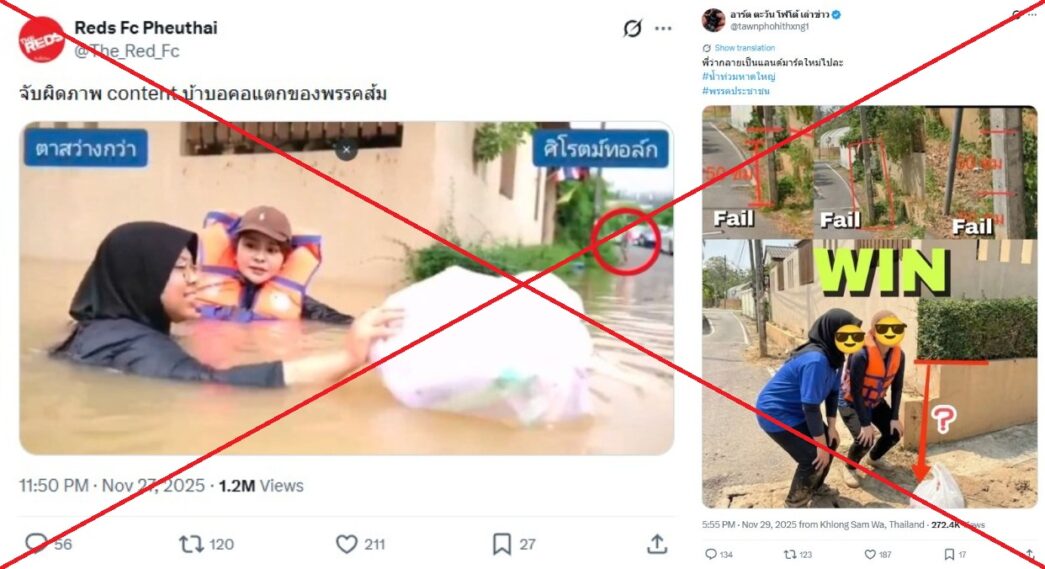

Lisa was one of the MPs who went into the affected areas to assist flood victims. However, she became a target of online attacks accusing her of staging a photo opportunity rather than genuinely helping the public. Some users took screenshots from a video clip posted on the X account of Sirote Klampaiboon, a news anchor and political analyst. The clip showed MP Lisa wearing a life jacket and supporting herself in chest-deep floodwater while assisting residents in Soi 17/1, Kanchanawanit Road, Hat Yai District, Songkhla Province, on 25 November.

These users attempted to “debunk” the scene by claiming that the water level at that location was not high enough to require a life jacket or to be “neck-deep,” because in a connecting alley, a man could be seen standing without floodwater around him.

In addition, AI-generated images were circulated, alleging that the water appeared deep only because MP Lisa deliberately bent her knees to make the situation look more severe than it actually was.

Examples of content attacking Phakamon, an MP from the People’s Party, accusing her of staging images to make the flooding appear deeper than it really was.

Using ChatGPT to Analyze the Image

Surawich, a columnist affiliated with the Manager Media Group, joined the fact-checking effort by using an AI tool—ChatGPT—to analyze images of MP Lisa during her flood relief work. He concluded that:

“Many people who see this image may think that the floodwater reaches the woman’s ‘neck.’ However, when analyzed in a principled way using objects in the image as reference points, it can be found that the actual water level is ‘not as deep as it appears.’”

He further stated in the post that:

“The water level in this image is likely around 90–110 centimeters, approximately waist- to chest-high for an adult, not neck-deep as initially perceived.”

Surawich explained that ChatGPT reached this conclusion based on three factors:

- Using a car as a reference point

A typical SUV has a hood height of approximately 85–100 cm. In the image, the waterline appears slightly above the hood but below the base of the windshield, indicating an actual water depth of around 90–110 cm.

- Why does the water appear neck-deep

When people stand in water deeper than waist level, they often bend their knees slightly to maintain balance or partially float, rather than standing fully upright. This can make the water appear to reach the neck, even though the actual level is closer to the chest or upper torso.

- Cross-checking with anthropometry

The average chest height of Thai women is approximately 95–105 cm above the ground, which aligns with the estimate derived from the vehicle comparison.
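The cross-check described in the post amounts to comparing two ranges of numbers. The minimal Python sketch below reproduces that comparison using only the figures quoted above; the function name `overlap` and the variable names are illustrative and do not come from the post or from ChatGPT.

```python
from typing import Optional, Tuple

Range = Tuple[float, float]  # (low_cm, high_cm)


def overlap(a: Range, b: Range) -> Optional[Range]:
    """Return the part shared by two ranges, or None if they do not overlap."""
    low = max(a[0], b[0])
    high = min(a[1], b[1])
    return (low, high) if low <= high else None


# Figures quoted in the post (centimeters).
water_depth_cm = (90.0, 110.0)   # estimate derived from the SUV hood
chest_height_cm = (95.0, 105.0)  # average chest height cited for Thai women

print(overlap(water_depth_cm, chest_height_cm))  # (95.0, 105.0)
```

The overlap shows only that the two quoted ranges are consistent with each other. It does not show that either range describes the water depth at the spot where MP Lisa was standing.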

Surawich’s AI-based analysis post was published on 1 December 2025, with additional comparison images added on 2 December.

Limitations of AI in Image Fact-Checking

Although AI capabilities have advanced rapidly over the past few years, significant limitations remain. Using AI for fact-checking, therefore, requires careful consideration. Applying AI to determine floodwater depth from images of MP Lisa involves the following key limitations:

1. AI has limited knowledge and relies solely on the information provided by users

If an AI system is given only one or two images to analyze, it has extremely limited information from which to assess the real situation. AI cannot independently search for geographic coordinates or gather all relevant video clips from websites and social media to support its analysis.

In this case, ChatGPT had no information that the area shown in the clip was a basin-like low point—the lowest section between two sloping roads. It also lacked contextual information about the corner house visible in the clip, which had been elevated at least one meter above road level, as confirmed by video footage and descriptions from nearby residents.

Without this critical context, AI may rely solely on comparing SUV height and average female body proportions, as cited in the post, leading to conclusions that do not reflect reality.
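To illustrate why ground elevation matters, the sketch below assumes a level water surface and a purely hypothetical difference in ground height between the reference vehicle and the spot where a person is standing. The 90–110 cm range is the figure quoted in the post; the ground-drop values and the function name `depth_at_lower_point` are illustrative assumptions, not measurements from the scene.

```python
from typing import Tuple

Range = Tuple[float, float]  # (low_cm, high_cm)


def depth_at_lower_point(depth_at_reference_cm: Range, ground_drop_cm: float) -> Range:
    """Water depth at a spot whose ground is `ground_drop_cm` lower than the reference.

    Assumes the water surface is level, so every centimeter of lower ground
    adds one centimeter of depth.
    """
    low, high = depth_at_reference_cm
    return (low + ground_drop_cm, high + ground_drop_cm)


estimate_at_vehicle_cm = (90.0, 110.0)  # range quoted in the post

# Hypothetical ground drops, for illustration only.
for ground_drop_cm in (0.0, 25.0, 50.0):
    low, high = depth_at_lower_point(estimate_at_vehicle_cm, ground_drop_cm)
    print(f"ground {ground_drop_cm:.0f} cm lower -> depth {low:.0f}-{high:.0f} cm")
```

Under these assumptions, a depth estimate taken at a reference object applies only to the ground that object stands on. Without elevation data for the scene, an image-only analysis has no way to make this correction.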

2. AI primarily follows prompts

Surawich did not disclose the exact prompts he used with ChatGPT. However, his Facebook post suggests that he asked the AI to determine whether the water level where MP Lisa was assisting flood victims truly reached neck level, and to analyze whether the depth was as great as it appeared.

Cofact tested ChatGPT using similar prompts along with two still images from Surawich’s post as baseline data. The result was that ChatGPT concluded the water levels “were not the same,” and that the first image “appears to be framed or angled to make the water seem deeper than it actually is.”

However, when additional information was provided—stating that the water level in the image did in fact reach the woman’s neck—ChatGPT then explained that “the previous answer was not wrong, but rather a limitation of assessment based on two still images from different angles.”

Examples showing how AI responses change depending on user prompts and opinions.

This illustrates that AI operates according to the user’s current prompt, as well as references from prior prompts or conversation history. Moreover, AI often avoids admitting “I don’t know” and instead generates potentially incorrect answers to satisfy users. This limitation may stem from how AI models are trained.

Even if AI initially provides a correct response, repeated questioning—such as asking whether the answer is truly correct—may cause the AI to change its answer back and forth depending on how the question is framed. This demonstrates that AI does not always provide factual truth, but may instead produce misleading information that reinforces user bias, especially when prompts contain clear assumptions or preconceived beliefs.

3. Difficulty in verifying the accuracy of AI-generated information

AI can assist in searching, summarizing information, or performing tasks quickly. However, it currently has too many limitations to be relied upon as a primary source or as the sole basis for a conclusion, particularly in fact-checking, where errors remain common.

It is therefore essential that users possess sufficient knowledge and critical thinking skills to verify whether AI-generated information or conclusions are truly accurate before using or sharing them. Otherwise, misinformation or misunderstandings may be spread widely, whether intentionally or unintentionally.